What does intelligent driving in new energy vehicles involve? The intelligent driving system of a new energy vehicle is a complex ecosystem that integrates perception, decision-making, control, communication, and data processing. Its core technology can be broken down into five modules:

1. Environmental perception layer: multi-dimensional perception network

A 360° perception matrix is built from millimeter-wave radar, lidar, high-definition cameras, and ultrasonic sensors. Lidar builds an accurate three-dimensional point cloud of the surroundings, cameras recognize traffic signs and pedestrian postures, and radar tracks moving objects through rain, snow, fog, and haze. Multi-sensor data fusion compensates for the blind spots of any single sensor and keeps environmental modeling accurate at night and in bad weather.
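The article does not say which fusion method is used. As a hedged illustration of the idea, the sketch below fuses independent distance estimates of the same object by inverse-variance weighting, one of the simplest fusion rules. The class name `RangeMeasurement`, the function `fuse_ranges`, and all noise variances are hypothetical; a sensor that is degraded (for example a camera in fog) is modeled simply by giving it a larger variance.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RangeMeasurement:
    """One sensor's estimate of the distance to the same object (hypothetical type)."""
    sensor: str
    distance_m: float
    variance: float  # assumed noise variance of this sensor, in m^2


def fuse_ranges(measurements: list[RangeMeasurement]) -> tuple[float, float]:
    """Inverse-variance weighted fusion of independent range measurements.

    Returns (fused distance, fused variance). Noisier sensors get less weight,
    and the fused variance is never larger than the best single sensor's.
    """
    valid = [m for m in measurements if m.variance > 0]
    if not valid:
        raise ValueError("no usable measurements")
    weights = [1.0 / m.variance for m in valid]
    total = sum(weights)
    fused = sum(w * m.distance_m for w, m in zip(weights, valid)) / total
    return fused, 1.0 / total


# Example: lidar and radar agree closely; a fog-degraded camera barely moves the result.
readings = [
    RangeMeasurement("lidar", 20.0, 0.01),
    RangeMeasurement("radar", 20.5, 0.04),
    RangeMeasurement("camera", 22.0, 1.0),  # assumed large variance in fog
]
distance, variance = fuse_ranges(readings)
```

Real perception stacks fuse full object tracks (position, velocity, class) with filters such as Kalman filters, but the weighting principle is the same.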

2. Decision-making and planning layer: driving brain system

A computing platform built on multi-core AI chips runs deep learning algorithms for scene recognition. The path planning module combines high-precision maps with real-time road conditions to generate obstacle-avoidance trajectories, and the behavior prediction model analyzes the movement intentions of pedestrians and vehicles to anticipate risks 2 seconds in advance. The Transformer-based neural network used in Tesla FSD can already handle unprotected left turns at complex intersections.
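To make the "predict risks 2 seconds ahead" idea concrete, here is a minimal sketch that uses a constant-velocity model as a stand-in for the learned prediction models the article mentions. It rolls the ego vehicle and another road user forward over a 2-second horizon and reports the first time their predicted positions come within a safety radius. The names `Agent` and `first_conflict_time`, the 0.1 s step, and the 2 m radius are all assumptions for illustration.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Agent:
    """Position (m) and velocity (m/s) in the ego frame: x forward, y left."""
    x: float
    y: float
    vx: float
    vy: float


def predict(agent: Agent, t: float) -> tuple[float, float]:
    """Constant-velocity prediction of the agent's position after t seconds."""
    return agent.x + agent.vx * t, agent.y + agent.vy * t


def first_conflict_time(ego: Agent, other: Agent, horizon_s: float = 2.0,
                        step_s: float = 0.1, safe_radius_m: float = 2.0) -> float | None:
    """Earliest time within the horizon at which the predicted positions are
    closer than safe_radius_m, or None if no conflict is predicted."""
    steps = int(round(horizon_s / step_s))
    for i in range(steps + 1):
        t = i * step_s
        ex, ey = predict(ego, t)
        ox, oy = predict(other, t)
        if math.hypot(ex - ox, ey - oy) < safe_radius_m:
            return t
    return None


# Ego drives forward at 10 m/s; a pedestrian 15 m ahead crosses from the left at 2 m/s.
ego = Agent(0.0, 0.0, 10.0, 0.0)
pedestrian = Agent(15.0, 3.0, 0.0, -2.0)
conflict_at = first_conflict_time(ego, pedestrian)
```

A planner would use a non-`None` result to slow down or re-plan well before the predicted conflict.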

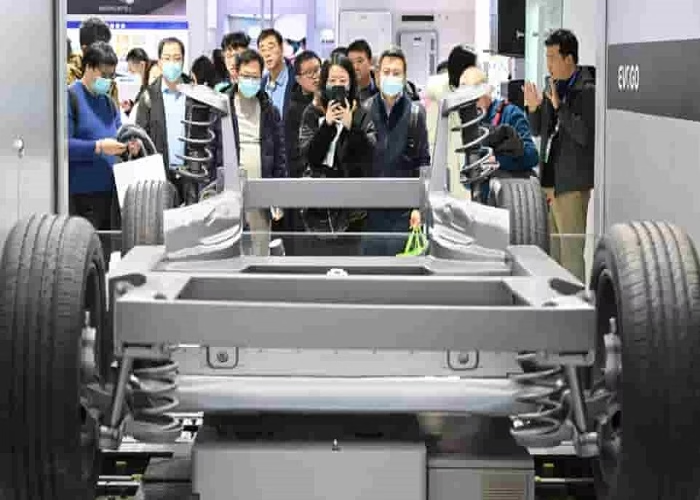

3. Execution control layer: the revolution of the drive-by-wire chassis

Electronic control units (ECUs) replace traditional mechanical linkages, bringing steering, braking, and propulsion fully under electronic control. A redundant braking system ensures that a backup can take over quickly if the primary system fails, and steer-by-wire responds three times as fast as a conventional steering system. Some models already support independent four-wheel drive control and can automatically redistribute torque on slippery roads to improve stability.
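As a rough illustration of torque distribution on a slippery road, the sketch below splits a requested drive torque across four independently driven wheels in proportion to each wheel's grip limit (the most torque it can transmit before slipping). It assumes those limits are estimated elsewhere, for example from road friction and wheel load; the function name `distribute_torque` and the numbers are hypothetical, and production controllers use far more elaborate allocation.

```python
from __future__ import annotations


def distribute_torque(total_nm: float, grip_limits_nm: list[float]) -> list[float]:
    """Split a non-negative drive torque request across independently driven wheels.

    Each wheel receives torque proportional to its grip limit, so a wheel on ice
    gets little torque and no wheel is asked for more than it can transmit.
    """
    capacity = sum(grip_limits_nm)
    if capacity <= 0:
        return [0.0 for _ in grip_limits_nm]
    # Clamp the request: no negative drive torque, and never more than total grip.
    deliverable = min(max(total_nm, 0.0), capacity)
    return [deliverable * g / capacity for g in grip_limits_nm]


# Front-left wheel on ice (low grip limit); the other three on dry asphalt.
limits = [100.0, 400.0, 400.0, 400.0]
split = distribute_torque(1000.0, limits)
```

Because each share equals `deliverable * g / capacity` and `deliverable` never exceeds `capacity`, every wheel stays within its own limit.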

4. Vehicle-cloud collaboration layer: intelligent network hub

5G-V2X technology enables vehicle-road-cloud collaboration, with vehicles receiving traffic signal states, construction warnings, and other information from roadside units (RSUs). A cloud training platform continuously updates the driving strategy, and over-the-air (OTA) upgrades give vehicles new functions every three months. Automakers such as NIO (Weilai) have built a closed loop around user driving data and use fleet learning to optimize their algorithms.
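One way a vehicle can use signal information from an RSU is a green-light speed advisory: given the distance to the stop line and the time left in the current green phase, compute the slowest constant speed that still clears the intersection before the light changes. The sketch below assumes a simplified, hypothetical message (`SignalPhase`); it is not a real V2X message format, and it ignores acceleration limits and queues.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class SignalPhase:
    """Hypothetical, simplified signal-phase message from an RSU."""
    state: str                 # "green", "yellow" or "red"
    seconds_remaining: float   # time until the phase changes


def min_speed_to_pass_on_green(distance_m: float, phase: SignalPhase,
                               speed_limit_mps: float) -> float | None:
    """Slowest constant speed (m/s) that reaches the stop line during the
    current green phase, or None if the vehicle should prepare to stop."""
    if phase.state != "green" or phase.seconds_remaining <= 0:
        return None
    needed = distance_m / phase.seconds_remaining
    # Passing would require exceeding the speed limit: advise stopping instead.
    return needed if needed <= speed_limit_mps else None


# 100 m from the stop line with 10 s of green left and a ~60 km/h limit.
advice = min_speed_to_pass_on_green(100.0, SignalPhase("green", 10.0), 16.7)
```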

5. Human-computer interaction layer: evolution of the intelligent cockpit

The multimodal interaction system combines voice, gesture, and facial recognition, and fatigue monitoring reaches 98% accuracy. AR-HUD projects navigation information onto the windshield, overlaying it on the real scene. An affective computing engine adjusts the cabin atmosphere by analyzing passengers' facial expressions, making the smart-driving experience feel warmer.
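The article does not describe how fatigue is detected. A widely used drowsiness measure is PERCLOS, the proportion of time within a window during which the eyes are mostly closed. The minimal sketch below assumes a facial-landmark model already supplies a per-frame eye-openness value between 0 (closed) and 1 (open); the 0.2 "closed" threshold corresponds to the common 80%-closure criterion, while the 0.15 alarm level and the function names are assumptions for illustration.

```python
from __future__ import annotations


def perclos(eye_openness: list[float], closed_threshold: float = 0.2) -> float:
    """Fraction of frames in which the eyes count as closed.

    eye_openness holds one value per video frame (1.0 = fully open,
    0.0 = fully closed). With the default threshold a frame counts as
    closed when the eyelid covers at least 80% of the eye.
    """
    if not eye_openness:
        return 0.0
    closed = sum(1 for v in eye_openness if v <= closed_threshold)
    return closed / len(eye_openness)


def is_fatigued(eye_openness: list[float], alarm_level: float = 0.15) -> bool:
    """Flag fatigue when PERCLOS over the window exceeds an assumed alarm level."""
    return perclos(eye_openness) > alarm_level


# A 10-frame window in which the eyes are closed for 2 frames.
window = [1.0] * 8 + [0.1] * 2
drowsy = is_fatigued(window)
```

In practice the window spans tens of seconds to a minute of video, and PERCLOS is usually combined with other cues such as blink duration and head pose.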

This technology stack lets new energy vehicles move beyond the traditional definition of a means of transport and evolve into mobile smart spaces. As lidar costs fall and autonomous driving regulations mature, mass-produced L3 vehicles are expected to become mainstream in the market in 2025, reshaping the future mobility ecosystem.
